Are Query Plans All We Need?

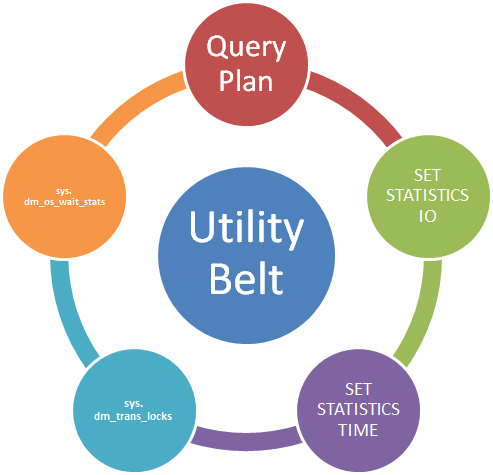

In How Can Query Plans Help Us?, we looked at the nature of T-SQL and then at some of the benefits that understanding query plans can bring us. But are query plans a silver bullet? Can we overcome all optimization challenges with query plans only or are there other tools we need on our utility belt?

Spoiler Alert: Yes, we need more stuff.

What’s Missing?

While query plans provide a wealth of useful performance information, they are neither a complete nor the only source of that information. There are still a number of things a query plan does not tell us.

For example, a query plan does not:

- Tell us about the types and number of locks acquired.

- Tell us about the wait statistics for a query.

- Tell us whether data is taken from cache or from disk.

- Tell us what the actual cost is and how it compares against the estimated cost.

What Complements Are There?

In addition to the query plan, there are a number of first-approach stethoscope-like instruments we should always keep in our utility belt when we are troubleshooting troublesome queries. These work in tandem with the query plan because they provide us with information the query plan does not.

Why don’t we take a quick look at each of them?

SET STATISTICS IO

This SET option, when turned on, makes SQL Server display useful statistical information about the disk activity generated by our queries.

For each single table in a T-SQL statement, SET STATISTICS IO provides these important bits of data:

- Number of seeks and scans required by the query.

- Number of logical reads.

- Number of physical reads.

- Number of read-ahead reads (pre-emptive cache).

- Number of large object logical reads.

- Number of large object physical reads.

- Number of large object read-ahead reads (pre-emptive cache).

You could say if there is one thing this SET option provides, it is numbers.

By using this SET option, we can quickly take a look at whether a query shows an unusually high number of scans or logical reads on a particular table. This can imply an inefficient query plan, possibly due to lack of useful indexing on that particular table.

Due to its instant finger-pointing abilities, STATISTICS IO shows its value at the early stages of troubleshooting a problematic query.

SET STATISTICS TIME

This SET option, when turned on, makes SQL Server display metrics about the time required to:

- Parse each statement.

- Compile each statement.

- Execute each statement.

STATISTICS TIME can help us identify if an apparently slow query is being so due to parsing and compilation time instead of execution time. With very complex T-SQL code, it is possible to end up with a compilation and optimization time similar or even greater than the execution time itself. This can strike as unusual in OLTP scenarios, because to the typical simplicity of operational queries. However, it can be a common occurrence in unoptimized OLAP environments, because of frequent complexity of reporting queries.

Because of its ability to compare compilation time against execution time, STATISTICS TIME, like STATISTICS IO show its value when used early while troubleshooting a complex OLAP query.

sys.dm_tran_locks

This management view gives us information about the currently active lock requests in SQL Server. It tells us what resources have locks on them, what type of lock it is and who requested it. This can help us to check whether an overwhelming number of low-level locks is being issued when a few higher-level locks would suffice. We can therefore use this as an indicator of whether it is worth tweaking locking escalation on the affected tables, or, if absolutely necessary, on the query itself, via table hints.

A quick way to make use of this view to troubleshoot a single query is to use this pattern:

SET XACT_ABORT ON

BEGIN TRANSACTION

/* place the query to be troubleshooted here */

UPDATE PumpkinTable

SET PumpkinName = 'Big Red Pumpkin'

WHERE PumpkinCode = 'PKN'

/* end of query to be troubleshooted */

SELECT * FROM sys.dm_tran_locks WHERE request_session_id = @@SPID

ROLLBACK

This simple pattern allows us to quickly check what locks are being requested just for our given query. It also avoids committing any operations to the database so we can repeat the process between interventions.

Note that troubleshooting deadlocks is another conversation altogether.

sys.dm_os_wait_stats

This management view can tell us about resource, queue and external waits that were encountered by finished threads. This is an aggregate view so it shows us totals for pretty much everything since the server was restarted or a manual reset was issued. Because of that, and unlike sys.dm_trans_locks, it does not allow us to filter on a specific session. It is therefore better used for general troubleshooting of abnormally slow servers.

Now if we really, really, really want to evaluate wait statistics for a particular query, we can use a pattern in the same fashion of sys.dm_trans_locks:

/* this clears the wait stats */

DBCC SQLPERF ('sys.dm_os_wait_stats', CLEAR);

SET XACT_ABORT ON

BEGIN TRANSACTION

/* place the query to be troubleshooted here */

UPDATE PumpkinTable

SET PumpkinName = 'Big Red Pumpkin'

WHERE PumpkinCode = 'PKN'

/* end of query to be troubleshooted */

SELECT * FROM sys.dm_os_wait_stats

WHERE waiting_tasks_count > 0

ORDER BY wait_time_ms DESC

ROLLBACK

Note that, while this quick-and-dirty approach might appear easy, it has a couple of deep flaws, namely:

- It needs to clear the aggregated stats. This is a global reset. That means they will be cleared for everyone else who might be interested in them too, namely your friendly DBA. It is therefore safer to only do this in our testing servers as opposed to production ones. There are other, less intrusive, ways of performing this analysis on a production server - such as using a temporary table to store interim differential results - so I recommend you research those instead.

- Depending on whatever else is happening on the machine, wait statistics can vary greatly from one execution to another. For example, on a desktop machine, Windows Superfetch might decide to start caching some data, making disk waits spike for no apparent reason. On a server, a varying number of other queries might be running during each execution of ours. It is therefore more reliable, if possible, to run the query multiple times and then calculate average waits per execution.

Got it! What’s Next?

We now looked at some puzzle pieces we need to keep in our troubleshooting repertoire. In the next few pumpkins, we’re going to take a look at some different ways of capturing query plans, before we proceed to “understand how to understand” those plans.